PRICURE

Abstract

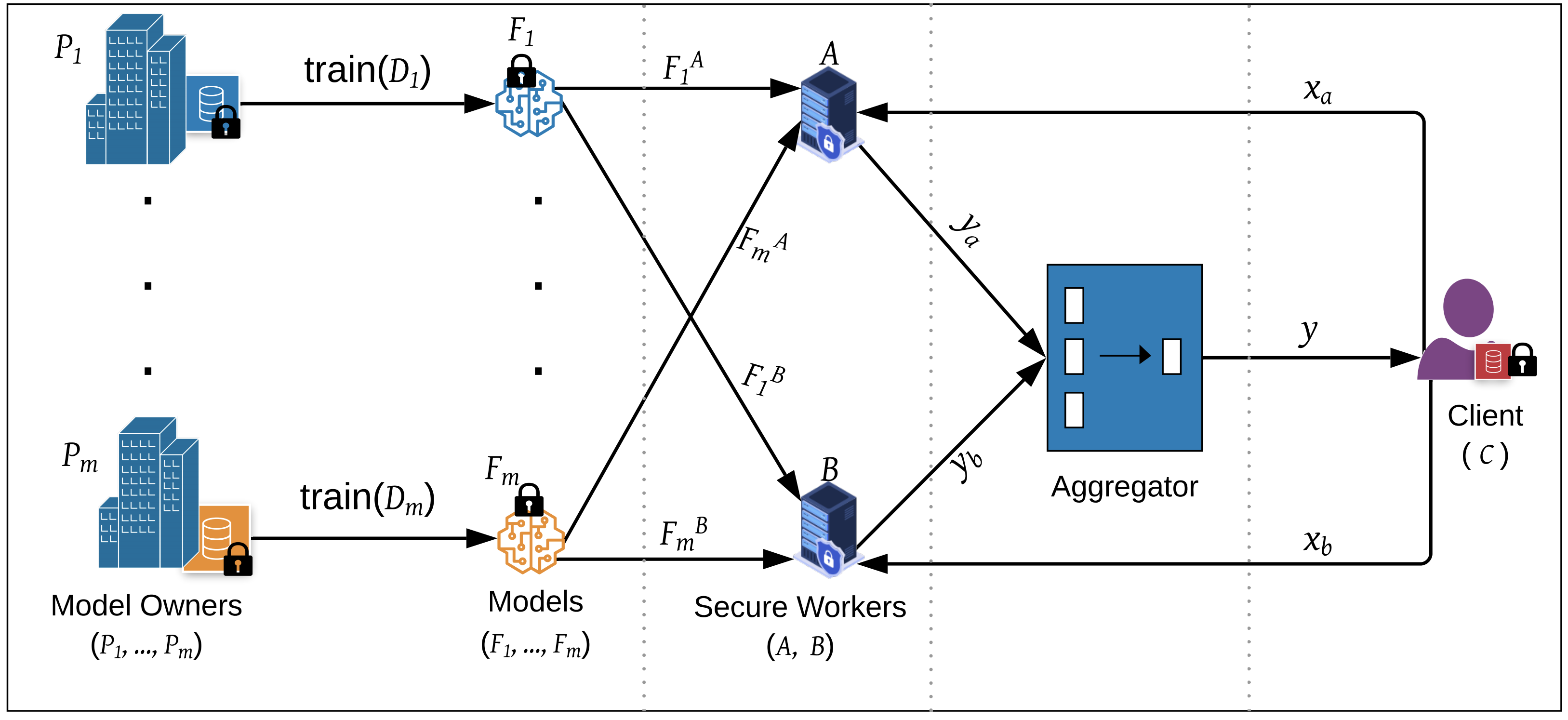

When multiple parties that hold private data want to collaborate on a prediction task such as medical image classification, they are often constrained by data protection regulations and by a lack of trust among the collaborating parties. If done in a privacy-preserving manner, predictive analytics can benefit from the collective prediction capability of multiple parties holding complementary datasets on the same machine learning task. This paper presents PRICURE, a system that combines the complementary strengths of secure multi-party computation (SMPC) and differential privacy (DP) to enable privacy-preserving collaborative prediction among multiple model owners. SMPC enables secret-sharing of private models and client inputs with non-colluding secure servers, which compute predictions without leaking model parameters or inputs. DP masks the true prediction results via noisy aggregation to deter a semi-honest client who may mount membership inference attacks. We evaluate PRICURE on neural networks across four datasets, including benchmark medical image classification datasets. Our results suggest that PRICURE guarantees privacy for tens of model owners and clients with acceptable accuracy loss. We also show that DP reduces membership inference attack exposure without hurting accuracy.
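The two building blocks named above can be illustrated with a minimal sketch. It is not PRICURE's actual protocol: the field modulus `PRIME`, the helper names (`share`, `reconstruct`, `laplace`, `noisy_argmax`), the choice of additive secret sharing, and the Laplace scale of `1/epsilon` are all assumptions made for illustration. The first part splits a value into additive shares that individually reveal nothing; the second part aggregates the labels predicted by several model owners by adding Laplace noise to the per-class vote counts before taking the argmax.

```python
import random

PRIME = 2**61 - 1  # field modulus; an assumption for this sketch


def share(secret, n_servers=2, rng=random):
    """Split an integer into n additive shares modulo PRIME."""
    shares = [rng.randrange(PRIME) for _ in range(n_servers - 1)]
    # The last share is chosen so that all shares sum to the secret.
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """Recombine additive shares; needs every share."""
    return sum(shares) % PRIME


def laplace(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_argmax(labels, n_classes, epsilon, rng=random):
    """Count votes per class, add Laplace noise, return the winning class."""
    counts = [0] * n_classes
    for label in labels:
        counts[label] += 1
    # Scale 1/epsilon is illustrative, not a calibrated privacy guarantee.
    noisy = [c + laplace(1.0 / epsilon, rng) for c in counts]
    return max(range(n_classes), key=noisy.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)
    a, b = share(20, rng=rng), share(22, rng=rng)
    # Each server adds the shares it holds locally; no server sees 20 or 22.
    summed = [(x + y) % PRIME for x, y in zip(a, b)]
    print(reconstruct(summed))
    # Labels predicted by seven hypothetical model owners for one input.
    print(noisy_argmax([1, 1, 1, 0, 2, 1, 1], n_classes=3, epsilon=1.0, rng=rng))
```

Addition of shares is shown because it needs no interaction between servers. Multiplying two secret-shared values, as a neural network layer with a secret-shared model and input would require, needs an additional protocol that this sketch omits. A smaller `epsilon` adds more noise and makes the returned label less dependent on any single model owner's vote.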